When I start a new software project, I define my test concept early. It’s more than a habit—it’s a smart strategy that ensures structured, scalable, and reliable testing. Without a clear concept, even simple plans can collapse into confusion: test cases get lost, roles overlap, and bugs go unnoticed. In this article, I’ll explain how I design a complete test concept, what key chapters it should include, and how this structure builds the foundation for efficient and high-quality software testing.

As a result, you’ll:

- Avoid critical test coverage gaps

- Reduce defect leakage into production

- Increase team coordination and test efficiency

- Deliver higher quality software—on time and with confidence

Let’s dive in and build a testing concept that works before the first bug even shows up.

Start With the Test Objectives

First, I define the test objectives, a crucial step in aligning testing with overall software project goals. What do I want to verify or validate? This might include functional correctness, performance stability, or user experience quality. In agile and DevOps environments, objectives might even extend to deployment reliability or CI/CD pipeline performance (CI = Continuous Integration, CD = Continuous Deployment/Delivery). I make these objectives measurable. For instance, if I test performance, I define how fast a feature must respond: say, a page must load in under two seconds under normal load.

Clear objectives help synchronize the efforts of QA engineers, developers, and project managers throughout the development lifecycle.
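To show what "measurable" can look like, here is a minimal Python sketch of the two-second page-load objective. The class name, the field names, and the 95% compliance share are my own assumptions for illustration; the measurements are made up.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceObjective:
    """A measurable objective; field names and defaults are illustrative."""
    name: str
    max_seconds: float            # a sample must be strictly below this limit
    required_share: float = 0.95  # assumed share of samples that must comply

    def is_met(self, samples: list[float]) -> bool:
        if not samples:
            raise ValueError("no measurements to evaluate")
        within_limit = sum(1 for s in samples if s < self.max_seconds)
        return within_limit / len(samples) >= self.required_share


page_load = PerformanceObjective(name="page load, normal load", max_seconds=2.0)
measured = [1.2, 1.4, 1.1, 1.9, 1.3]  # made-up response times in seconds
print(page_load.name, "met:", page_load.is_met(measured))
```

Writing the objective down as data rather than prose makes it hard to argue later about whether it was met.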

Define the Test Strategy and Test Levels

Next, I decide on a test strategy. This defines how I will achieve the objectives within the constraints of time, budget, and resources. Will I use manual testing, automated tools, or both? Will I adopt a shift-left approach to catch defects early? I then break the process into test levels—

- unit,

- integration,

- system, and

- acceptance tests.

Each level aligns with a phase in the software development life cycle (SDLC). For example, unit tests, typically written by developers, check individual functions. Integration tests verify that modules work together. System tests validate the end-to-end workflow. Acceptance tests ensure that business requirements are met and often involve stakeholders or clients.

Identify the Test Objects

Now I ask: What exactly am I testing? These are my test objects. They include software modules, APIs, databases, or user interfaces. In complex software projects, this could also mean microservices, containerized applications, or even cloud infrastructure configurations. I list them clearly and specify versions or build numbers. That ensures everyone on the team—from developers to product owners—understands the testing scope and environment setup. It also supports traceability and helps when integrating with tools like JIRA, TestRail, or Azure DevOps.

Select the Test Types

Then, I define the test types that best align with the software’s risk profile and business impact. These could be

- functional tests,

- performance tests,

- usability tests,

- accessibility tests, or even

- security audits like penetration testing.

For instance, if I’m working on an online shop, I’ll run usability tests on the checkout process, ensuring smooth UX. At the same time, I’ll conduct load tests on the product search API using tools like JMeter or Locust. In regulated industries, I might include compliance testing (e.g., HIPAA, GDPR). Choosing the right mix of test types increases confidence in both technical and business quality.

Specify the Test Coverage

I never skip test coverage planning. I map each test case to

- user stories,

- functional requirements,

- or risk areas.

This ensures that critical paths and potential failure points are tested. I might say:

“We will test all login paths, including forgotten password and OAuth login.”

This approach prevents blind spots. In larger software projects, I use requirements traceability matrices (RTMs) to ensure each requirement has matching test cases. Coverage metrics—like statement coverage, branch coverage, and requirements coverage—guide continuous improvement and stakeholder reporting.
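A requirements traceability matrix can be as simple as a mapping from test case IDs to the requirements they cover. The following Python sketch computes requirements coverage from such a mapping; the requirement IDs and the function name are hypothetical and only mirror the login example above.

```python
def requirements_coverage(requirements, rtm):
    """Return (coverage ratio, sorted list of uncovered requirement IDs).

    `rtm` maps a test case ID to the requirement IDs it verifies.
    """
    covered = {req for reqs in rtm.values() for req in reqs}
    uncovered = sorted(set(requirements) - covered)
    if not requirements:
        return 0.0, uncovered
    return 1 - len(uncovered) / len(requirements), uncovered


# Hypothetical requirement and test case IDs for the login paths.
login_requirements = ["REQ-LOGIN-PASSWORD", "REQ-LOGIN-FORGOT", "REQ-LOGIN-OAUTH"]
rtm = {
    "TC-001": ["REQ-LOGIN-PASSWORD"],
    "TC-002": ["REQ-LOGIN-FORGOT"],
}

ratio, missing = requirements_coverage(login_requirements, rtm)
print(f"coverage: {ratio:.0%}, uncovered: {missing}")
```

In this made-up matrix the OAuth login has no test case yet, which is exactly the kind of blind spot the matrix is meant to expose.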

Create a Test Case Overview

Once I know what to test, I list all planned test cases. I group them by

- software module,

- test type, or

- business priority.

For example, I might start with payment processing test cases, followed by user registration and role management. In agile environments, I align test cases with sprints and user stories. A clear overview helps me estimate effort, assign tasks, and manage timelines. When test cases are linked to project management tools, I can track execution status in real time and quickly identify bottlenecks or regressions.

Evaluate the Test Objectives and Coverage

I regularly evaluate whether I’m meeting my test objectives. I use test coverage metrics, defect leakage rates, and test execution trends. If tests don’t catch expected bugs or deliver inconsistent results, I refine them or update the test data. I also check for untested areas, especially after last-minute code changes or hotfixes. This evaluation loop supports continuous testing and contributes to DevOps feedback cycles. Regular retrospectives and reviews help align test efforts with project milestones, keeping the team on track and product quality on target.
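Defect leakage is easy to compute once defects are counted per phase. The sketch below uses one common definition (defects found in production divided by all defects found); teams define this metric differently, so treat the formula and the numbers as assumptions.

```python
def defect_leakage_rate(found_in_testing: int, found_in_production: int) -> float:
    """Share of all known defects that escaped to production."""
    total = found_in_testing + found_in_production
    if total == 0:
        return 0.0  # nothing found anywhere, so nothing leaked
    return found_in_production / total


# Made-up counts for one release.
rate = defect_leakage_rate(found_in_testing=45, found_in_production=5)
print(f"defect leakage: {rate:.0%}")
```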

Build a Reliable Test Framework

A structured test framework is the backbone of any successful QA process (QA = Quality Assurance). In software development projects—especially large-scale or agile ones—a well-defined framework ensures

- consistency,

- repeatability, and

- scalability.

It supports both manual and automated testing and helps integrate testing into the overall DevOps pipeline. A robust framework improves communication, defect tracking, and test reuse across teams and releases.

Test Preconditions

I clearly state what preconditions must exist before I can begin testing. These include technical setups and functional dependencies. For example, I might need a specific version of the database, a configured test environment, access to staging servers, or completed user stories from the current sprint. Preconditions may also include test data readiness, network configurations, or even test accounts. Documenting these helps prevent wasted time due to missing setups and ensures tests reflect realistic conditions seen in production environments. It also facilitates smooth integration with CI/CD pipelines by automating environment verification.
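Environment verification can be automated with a small set of named checks that run before the test suite starts. This is a minimal Python sketch; the check names, the `STAGING_URL` variable, and the file path are hypothetical placeholders for whatever a project actually needs.

```python
import os
from pathlib import Path
from typing import Callable


def check_preconditions(checks: dict[str, Callable[[], bool]]) -> list[str]:
    """Run each named check and return the names of those that failed."""
    failed = []
    for name, check in checks.items():
        try:
            ok = bool(check())
        except Exception:
            ok = False  # a check that crashes counts as a failed precondition
        if not ok:
            failed.append(name)
    return failed


# Hypothetical preconditions for a staging test run.
preconditions = {
    "staging URL configured": lambda: bool(os.environ.get("STAGING_URL")),
    "test data file present": lambda: Path("testdata/users.csv").exists(),
}

print("unmet preconditions:", check_preconditions(preconditions))
```

A pipeline step could run this first and stop the run when the returned list is not empty.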

Defect Classification

Next, I define defect categories to streamline triage and resolution. I classify issues by severity (e.g., critical, major, minor) and sometimes priority. This helps teams focus on the most impactful bugs first, which is vital in fast-paced agile and continuous delivery workflows. A critical defect might block user registration, while a minor one could be a cosmetic issue in the footer. Clear defect classification improves communication between testers, developers, and product owners, and supports root cause analysis during retrospectives. In some software projects, I also tag defects by type (e.g., logic error, integration issue, performance bottleneck) to uncover systemic weaknesses over time.
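The classification above maps naturally onto a small data model. In this Python sketch, the severity levels come from the text, while the numeric priority scale, the defect keys, and the type tags are illustrative assumptions.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    CRITICAL = 1
    MAJOR = 2
    MINOR = 3


@dataclass(frozen=True)
class Defect:
    key: str
    summary: str
    severity: Severity
    priority: int = 3                  # assumed scale: 1 = fix first
    defect_type: str = "unclassified"  # e.g. "logic error", "integration issue"


def triage_order(defects):
    """Sort by severity first, then by priority within the same severity."""
    return sorted(defects, key=lambda d: (d.severity, d.priority))


backlog = [
    Defect("BUG-3", "Cosmetic issue in the footer", Severity.MINOR),
    Defect("BUG-1", "User registration is blocked", Severity.CRITICAL, priority=1),
    Defect("BUG-2", "Product search is slow", Severity.MAJOR, 2, "performance bottleneck"),
]

for defect in triage_order(backlog):
    print(defect.severity.name, defect.key, defect.summary)
```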

Entry and Exit Criteria

I also set entry and exit criteria for each test phase. These are formal conditions that define when a phase can start and when it is considered complete. For instance, I might say:

“System testing begins when integration tests pass with a failure rate below 5% and all critical defects are resolved.”

These criteria prevent premature testing and ensure quality gates are respected across the SDLC. They align testing milestones with development sprints, release cadences, and release readiness checklists. For example, exit criteria for system testing might include:

- All critical and major defects resolved

- 90% test case pass rate

- 100% execution of high-priority test cases

Defining these criteria improves release confidence, helps with regulatory compliance, and reduces the risk of last-minute surprises before go-live.
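Exit criteria like these can be evaluated automatically at the end of a phase. The Python sketch below checks the three example criteria for system testing; the field names are hypothetical, and I assume the pass rate is measured against executed test cases.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PhaseStatus:
    executed: int
    passed: int
    high_priority_total: int
    high_priority_executed: int
    open_critical_or_major: int


def unmet_exit_criteria(status: PhaseStatus, min_pass_rate: float = 0.90) -> list[str]:
    """Return a description of every exit criterion that is not yet met."""
    unmet = []
    if status.open_critical_or_major > 0:
        unmet.append("critical or major defects are still open")
    pass_rate = status.passed / status.executed if status.executed else 0.0
    if pass_rate < min_pass_rate:
        unmet.append(f"pass rate {pass_rate:.0%} is below {min_pass_rate:.0%}")
    if status.high_priority_executed < status.high_priority_total:
        unmet.append("not all high-priority test cases were executed")
    return unmet


# Made-up numbers for one system test phase.
status = PhaseStatus(executed=200, passed=170, high_priority_total=40,
                     high_priority_executed=38, open_critical_or_major=2)
print(unmet_exit_criteria(status))
```

An empty list means the quality gate is open; anything else is a concrete list of what still blocks the release.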

Design a Stable Test Environment

To ensure repeatability and reliability in test results, I design a stable test environment that mirrors the target production system as closely as possible. In software development projects, especially those using agile, DevOps, or microservices architectures, consistency in test environments is essential for identifying issues early and accurately. A poorly defined or unstable environment can lead to flaky tests, false positives, and wasted debugging time.

Define Environment Components

I start by defining all components of the environment in detail. This includes:

- Operating systems (e.g., Windows 11, Ubuntu 22.04)

- Databases (e.g., PostgreSQL, MongoDB)

- Middleware (e.g., Apache Kafka, Redis)

- Third-party APIs or SDKs (e.g., payment gateways, authentication services)

Each component is version-controlled and documented. This ensures developers and testers are aligned on what configurations to use. In projects with multiple teams or global contributors, this reduces environment drift and supports consistent builds and deployments.
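Once the expected component versions are documented, checking an environment for drift becomes a simple comparison. This is a minimal Python sketch; the version numbers are made up, and how the actual versions are collected is left out on purpose.

```python
from typing import Optional


def find_drift(expected: dict[str, str],
               actual: dict[str, str]) -> dict[str, tuple[str, Optional[str]]]:
    """Map each drifting component to (expected version, version found).

    A component missing from the environment is reported with None.
    """
    drift = {}
    for component, version in expected.items():
        found = actual.get(component)
        if found != version:
            drift[component] = (version, found)
    return drift


# Illustrative versions only; the real list lives in version control.
documented = {"ubuntu": "22.04", "postgresql": "16.2", "redis": "7.2"}
test_env = {"ubuntu": "22.04", "postgresql": "15.6"}

print(find_drift(documented, test_env))
```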

Document Network Conditions

I also document and replicate network conditions, such as simulated latency, packet loss, or bandwidth limits. This is crucial for testing real-world performance and reliability. For example, if I’m building a mobile app, I simulate 3G and 4G networks to observe app behavior under slower connections. Tools like Charles Proxy, Wireshark, or WAN emulators help simulate production-like traffic conditions.

Environment Management in Projects

In modern software projects, test environments are often managed as code using Infrastructure as Code (IaC) tools like Terraform, Ansible, or Docker Compose. This allows automated setup, teardown, and replication of environments across different stages—development, testing, staging, and production. I also integrate environment monitoring tools to detect configuration drifts, hardware bottlenecks, or service downtime.

Stable test environments reduce test flakiness, speed up debugging, and support effective continuous integration/continuous delivery (CI/CD). They are a foundation for trustworthy test results and play a key role in building high-quality, resilient software.

Write Detailed Test Case Specifications

Each test case gets a detailed specification to ensure

- clarity,

- consistency, and

- traceability

across the software testing process. In software projects—especially those involving agile sprints, cross-functional teams, or regulatory compliance—well-written test cases act as both quality control tools and knowledge-sharing assets. They allow QA engineers, developers, business analysts, and even stakeholders to understand exactly what is being tested and why.

Include All Essential Elements

A strong test case includes the following components:

- Test case ID (for traceability)

- Title (descriptive and concise)

- Preconditions (system state before execution)

- Test steps (sequential actions to perform)

- Input data (including data types and value ranges)

- Expected results (precise, verifiable outcomes)

- Postconditions (optional: expected state after execution)

- Priority and test type (functional, regression, smoke, etc.)

This level of detail helps catch subtle bugs and ensures consistent execution—especially important in automated test scripts or when onboarding new QA team members.

Example: Login Test Case

Here’s how I specify a basic login test case in a structured format:

Test Case ID: TC-001

Title: Valid Login Redirects to Dashboard

Preconditions:

- User account exists in database

- Browser is on the login page

Steps:

- Enter valid username and password

- Click “Login”

Input Data:

- Username: testuser@example.com

- Password: Secure123!

Expected Result:

- User is redirected to the dashboard

- Dashboard loads with personalized welcome message
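The same specification can be captured as structured data, which makes it easier to validate and to hand over to automation later. The Python sketch below mirrors TC-001; the class and its field names are my own illustration, not the schema of any particular test management tool.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class CaseSpecification:
    case_id: str
    title: str
    steps: list[str]
    expected_results: list[str]
    preconditions: list[str] = field(default_factory=list)
    input_data: dict[str, str] = field(default_factory=dict)
    postconditions: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of required fields that were left empty."""
        required = ("case_id", "title", "steps", "expected_results")
        return [name for name in required if not getattr(self, name)]


tc_001 = CaseSpecification(
    case_id="TC-001",
    title="Valid Login Redirects to Dashboard",
    preconditions=["User account exists in database", "Browser is on the login page"],
    steps=["Enter valid username and password", "Click Login"],
    input_data={"username": "testuser@example.com", "password": "Secure123!"},
    expected_results=[
        "User is redirected to the dashboard",
        "Dashboard loads with personalized welcome message",
    ],
)

print(tc_001.case_id, "missing fields:", tc_001.missing_fields())
```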

Importance in Development and Agile Projects

In agile development, test case specifications often link directly to user stories or acceptance criteria in tools like JIRA, Azure DevOps, or TestRail. This enables traceability from requirement to validation. Well-written test cases are also vital for regression testing in continuous delivery environments, ensuring that newly developed features don’t break existing functionality.

In regulated industries like finance or healthcare, test cases serve as formal documentation for audits and compliance reviews. In test automation projects, detailed manual test cases become the blueprint for reliable automated scripts in tools like Selenium, Cypress, or Playwright.

Develop the Test Plan

Now that I know what to test, I create a structured and actionable test plan. In any software development project—whether agile, waterfall, or hybrid—the test plan acts as a roadmap that aligns testing activities with the overall project timeline and business goals. It turns strategy into execution by organizing scope, responsibilities, schedules, and resource allocation. A well-developed test plan minimizes surprises, improves team coordination, and keeps everyone focused on delivering a quality product.

Schedule Tests with Start and End Dates

I define start and end dates for all test activities, ensuring alignment with development sprints, release cycles, or project milestones. This includes:

- Unit test periods during development

- Integration testing as features are combined

- System testing near code freeze

- User acceptance testing (UAT) before release

These dates are often linked to larger release plans or PI planning in SAFe frameworks. Accurate scheduling allows early detection of resource conflicts or timeline risks.

Include Milestones and Responsibilities

My test plan outlines key milestones, such as:

- Environment readiness

- Completion of test case design

- Test execution start and end

- Defect triage deadlines

- Test report delivery

For each milestone, I assign roles and responsibilities: who writes which test cases, who executes them, who analyzes results, and who owns defect resolution. This often includes developers, QA engineers, product owners, and automation testers. Using tools like TestRail, Zephyr, or Xray, I link each task to responsible individuals, keeping accountability clear.

Break Large Tasks into Smaller Chunks

To manage complexity and avoid last-minute panic, I break large tasks into smaller, trackable units. For example, instead of “complete system testing,” I create subtasks for:

- Test the login module

- Validate payment workflows

- Execute performance scripts

- Check edge cases for form validation

This approach supports agile planning and makes progress easier to measure. It also enables better risk assessment and task reallocation if priorities shift. Integrating test planning into tools like JIRA or Asana helps visualize task breakdown and keeps the entire dev and QA team in sync.

Value in Software Projects

In software projects, a well-crafted test plan

- brings visibility to stakeholders,

- improves risk management, and

- supports compliance (where required).

It also prepares the team for scalability, whether testing a simple web app or a complex enterprise solution.

As projects grow or shift direction, the test plan becomes a living document, updated to reflect changes in scope, timelines, or requirements.

Organize Roles and Responsibilities

Clear roles and responsibilities are essential for smooth collaboration in any software project. When team members understand their specific testing duties, it prevents confusion, reduces overlaps, and accelerates issue resolution. This is especially important in agile or DevOps environments, where cross-functional teams must work in sync to deliver high-quality software under tight deadlines.

Assign Responsibilities Across the Testing Workflow

I assign each team member a specific testing responsibility based on their expertise and the phase of testing. Here’s a typical breakdown in a software development project:

- Test Manager: Oversees the overall test process, defines the test strategy, monitors progress, manages risks, and reports to stakeholders. Acts as the bridge between QA, development, and product management.

- Testers (QA Engineers): Design and execute test cases, log and track defects, perform exploratory testing, and verify bug fixes. In agile teams, testers often participate in refinement sessions and story grooming to define test acceptance criteria.

- Automation Engineers: Build and maintain automated test scripts for regression, performance, or API testing using tools like Selenium, Cypress, or Postman. They integrate automated tests into the CI/CD pipeline.

- Developers: Fix defects, write unit tests, support integration testing, and often pair with QA to reproduce complex issues. They’re also involved in code reviews and test-driven development (TDD) where applicable.

- Product Owner / Business Analyst: Validate that test cases align with business requirements, clarify edge cases, and help prioritize defects based on business impact.

Benefits in Agile and Large-Scale Projects

In agile teams, roles may be flexible but responsibilities must still be clearly documented—especially when working with distributed teams or external testers. Using a RACI matrix (Responsible, Accountable, Consulted, Informed) helps clarify who owns what. In larger or enterprise-scale software projects, formal role documentation helps coordinate across departments and ensure compliance with standards like ISO, CMMI, or ISTQB best practices.
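A RACI matrix is simple enough to validate with a few lines of code. This Python sketch assumes one code per role and task, and enforces the usual convention of exactly one Accountable and at least one Responsible per task; the task and role names are illustrative.

```python
def raci_problems(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Check each task: exactly one 'A' and at least one 'R'."""
    problems = []
    for task, assignments in matrix.items():
        codes = list(assignments.values())
        if codes.count("A") != 1:
            problems.append(f"{task}: needs exactly one Accountable")
        if "R" not in codes:
            problems.append(f"{task}: needs at least one Responsible")
    return problems


# Hypothetical assignments: R = Responsible, A = Accountable,
# C = Consulted, I = Informed.
raci = {
    "Define test strategy": {"Test Manager": "A", "Tester": "R", "Developer": "C"},
    "Fix defects": {"Developer": "R", "Tester": "C", "Product Owner": "I"},
}

print(raci_problems(raci))
```

Here the second task has nobody accountable, which the check reports.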

Everyone Knows Their Job

When responsibilities are clearly assigned:

- Testers aren’t waiting for vague instructions

- Developers know when and how to respond to bugs

- Test managers can forecast workloads and timelines accurately

- Stakeholders know who to contact for specific concerns

This clarity boosts productivity, reduces delays, and improves overall software quality assurance. It also makes onboarding smoother and helps during audit reviews or project handovers.

Build the Test Infrastructure

Finally, I create a strong and scalable test infrastructure—the foundation for efficient and reliable testing. In modern software development projects, especially those following agile, DevOps, or continuous delivery practices, the test infrastructure must support fast feedback, automation, and collaboration across teams. A well-built infrastructure reduces test flakiness, shortens feedback loops, and enhances software quality at every stage of the SDLC.

Test Systems

These are the environments where I run my tests. I typically use clones of the production system or highly realistic staging environments to catch issues that users might face in the real world. This includes:

- OS and browser configurations

- Network settings (e.g., VPNs, proxies)

- Backend integrations (e.g., databases, APIs, services)

- Load balancers, firewalls, and service discovery layers (in microservices projects)

In cloud-native projects, these systems are provisioned dynamically using Infrastructure as Code (IaC) tools like Terraform, run on container platforms such as Docker or Kubernetes, and maintained using CI/CD pipelines to ensure consistency and speed.

Test Data

I define what test data is required for meaningful and thorough test execution. I ensure data represents real-world scenarios, includes edge cases, and respects privacy and compliance rules (e.g., GDPR, HIPAA).

- For functional tests, I use anonymized or synthetic customer data to simulate actual usage.

- For performance tests, I generate large datasets to measure response under load.

- For boundary testing, I include invalid formats, empty values, and extreme conditions.

In automated environments, I often implement data provisioning scripts and data cleanup tools to reset test environments between runs, ensuring repeatability and isolation.
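A data provisioning script does not have to be complicated. The Python sketch below generates reproducible synthetic customers and classic boundary values; the record fields, the age range, and the invalid-input samples are assumptions chosen for illustration.

```python
import random
import string


def synthetic_customers(count: int, seed: int = 42) -> list[dict]:
    """Generate fake customers; a fixed seed keeps every run repeatable."""
    rng = random.Random(seed)
    customers = []
    for index in range(count):
        name = "".join(rng.choices(string.ascii_lowercase, k=8))
        customers.append({
            "id": index + 1,
            "email": f"{name}@example.com",
            "age": rng.randint(18, 90),
        })
    return customers


def boundary_values(minimum: int, maximum: int) -> list[int]:
    """Values just outside, on, and just inside both ends of a valid range."""
    return [minimum - 1, minimum, minimum + 1, maximum - 1, maximum, maximum + 1]


# Invalid formats and empty values for negative tests of an email field.
INVALID_EMAILS = ["", "   ", "no-at-sign.example.com", "user@", "@example.com"]

print(synthetic_customers(2))
print(boundary_values(18, 90))
```

Because the generator is seeded, a failing test can be reproduced with exactly the same data on the next run.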

Test Tools

I list all test tools I use, ensuring team members know what’s available, how to access it, and where documentation lives. These typically include:

- Selenium / Cypress / Playwright – for UI automation

- JIRA / Azure DevOps / Xray – for defect tracking and test management

- Postman / REST Assured – for API testing

- JMeter / Locust – for performance testing

- GitHub Actions / Jenkins / GitLab CI – for automated test execution

I maintain internal wikis or tool directories that cover credential access (stored securely), setup instructions, test report locations, and best practices for usage. This promotes transparency, reduces onboarding time, and encourages tool standardization across projects.

Value in Software Projects

A strong test infrastructure supports scalable testing, rapid releases, and high product reliability. In enterprise or large-scale projects, it also helps manage multiple test environments across parallel teams, enabling concurrent development and testing without conflict. Additionally, integrating monitoring and logging tools (like Grafana, ELK Stack, or Datadog) into the test infrastructure allows real-time analysis and faster debugging.

Final Thoughts

Now you know what goes into a test concept. A structured test concept helps me plan and execute tests that are both efficient and effective. When I specify each chapter carefully, from objectives to tools, I reduce risk and deliver higher-quality software. Above all, I save time and improve communication within the team.

If you’re setting up a new test project, don’t skip this step. Specify your test concept with care, and your testing process will thank you later.

What’s Next?!

Now that you understand how a strong test concept sets the foundation for reliable testing, it’s time to explore what happens next. Every test phase has its own purpose, from planning to execution and reporting. Curious how these steps connect to deliver quality software? Continue reading my next article — Test Activities in Software Development — and discover how each activity plays a crucial role in building better, faster, and more dependable systems.

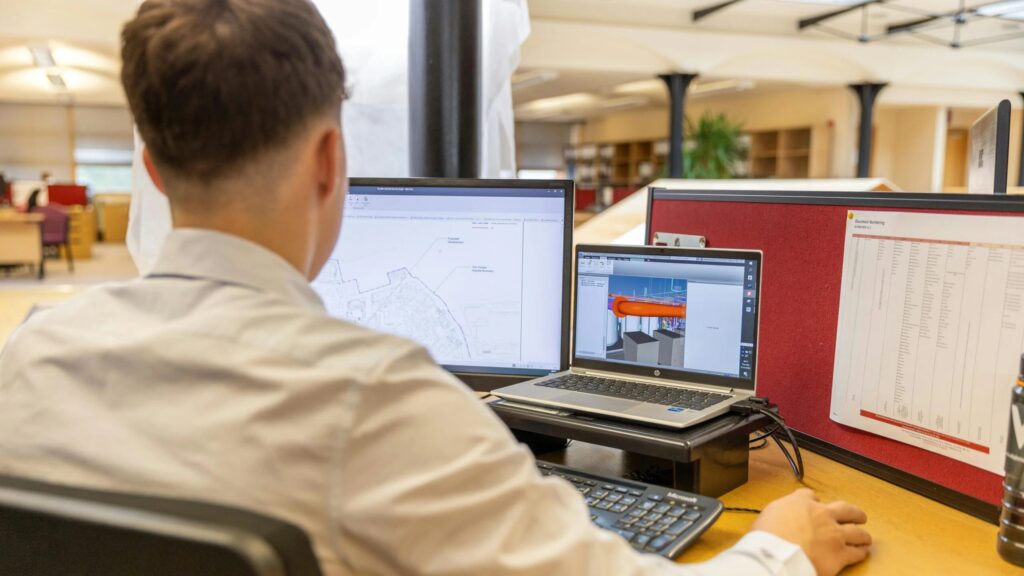

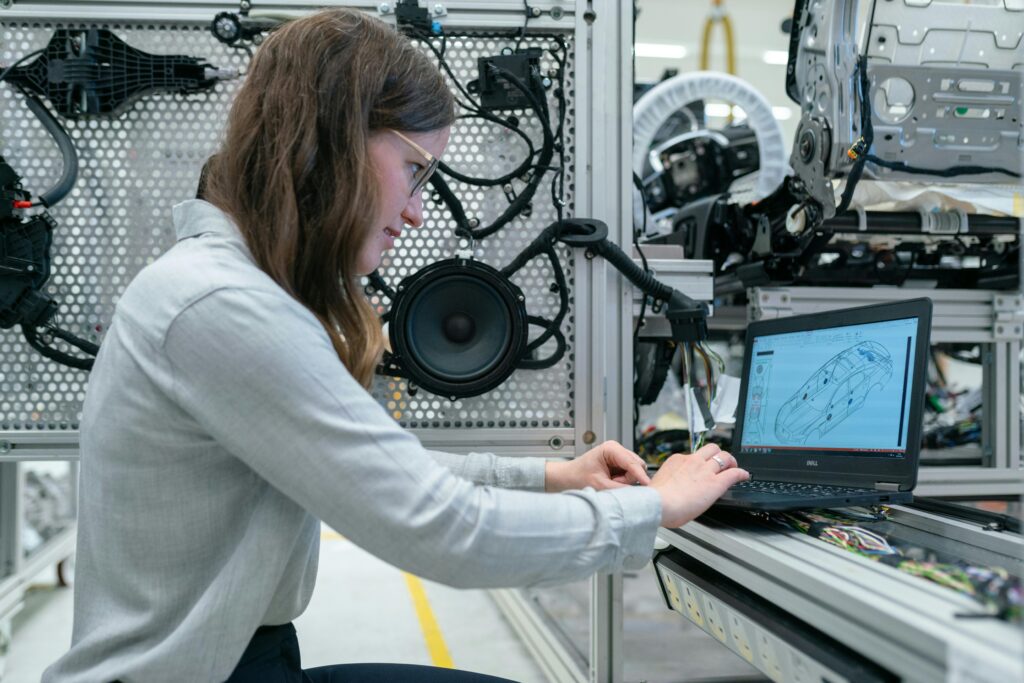

Credits: Photos by ThisIsEngineering from Pexels
