What are Encryption Algorithms? A Simple and Clear Guide

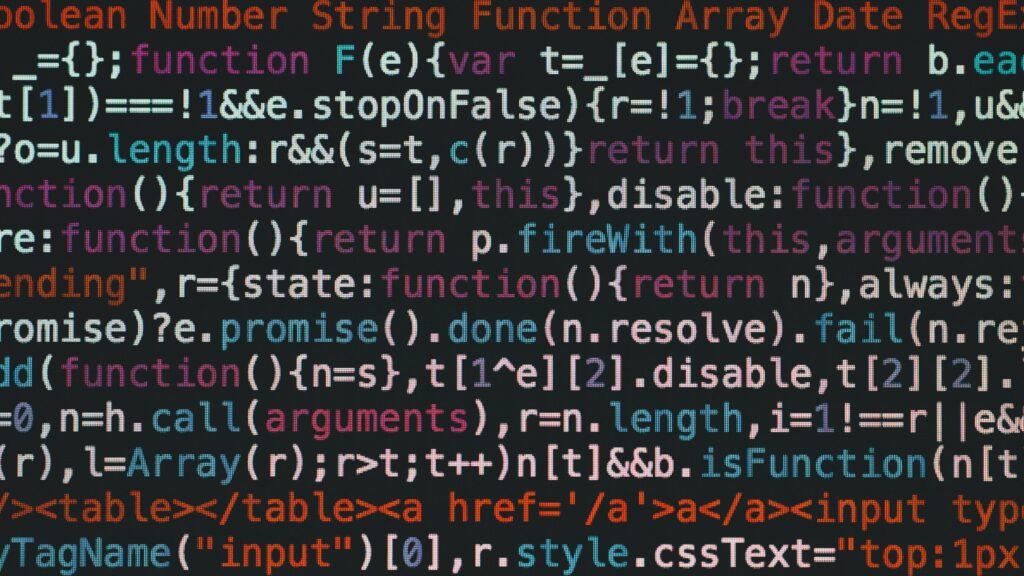

Each time I send a message, upload a file, or shop online, my data moves through many systems. Some of these systems may be secure, and some may not. That’s why I need a way to protect the content of my messages from prying eyes. Enter encryption. In this article, I explain what encryption is, how it works, why it’s vital for IT security, and what types of encryption exist. I cover everything from simple concepts to real-world techniques. I also show the difference between link encryption and end-to-end encryption, and I explain how modern systems use keys and algorithms to keep your data safe.